Kubernetes Networking

Networking Terms

- Conceptually, CNI lets you add containers to a network and remove them from it.

- Within a pod there exists a so-called _infrastructure container_. This is the first container that the kubelet launches, and it acquires the pod's IP and sets up the network namespace. All the other containers in the pod then join the infrastructure container's network and IPC namespaces. The infra container has network bridge mode enabled, and all the other containers in the pod join this namespace via container mode.

- iptables/ip6tables/hairpinMode

Kubernetes requirements for cluster networking

- All containers can communicate with all other containers without NAT.

- All nodes can communicate with all containers (and vice versa) without NAT.

- The IP that a container sees itself as is the same IP that others see it as.

Given these constraints, we are left with four distinct networking problems to solve:

- Highly-coupled Container-to-Container networking: this is solved by pods and localhost communications, whose network is provided by the pause container.

- Pod-to-Pod networking: please refer to Cluster Networking; this is solved by the Kubernetes network model (CNI plugin and Project Calico, for example).

- Pod-to-Service networking: this is covered by services and managed by kube-proxy.

- Internet-to-Service networking: this is covered by services and managed by kube-proxy.

"will spin up an HTTP(S) Load Balancer for you": will start (provision) an HTTP(S) load balancer on your behalf

Kubernetes does not use the standard Docker bridge device; in fact, “cbr” is short for “custom bridge.”

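Kubernetes nodes run a number of "pause" containers, also called sandbox containers, whose only job is to reserve and hold a network namespace (netns) that is shared by all containers in the Pod. This way, the Pod IP does not change even if a container dies and a new one is created to replace it. A big advantage of this IP-per-pod model is that Pods never have IP or port conflicts with the underlying host, so we do not have to worry about which ports an application uses.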

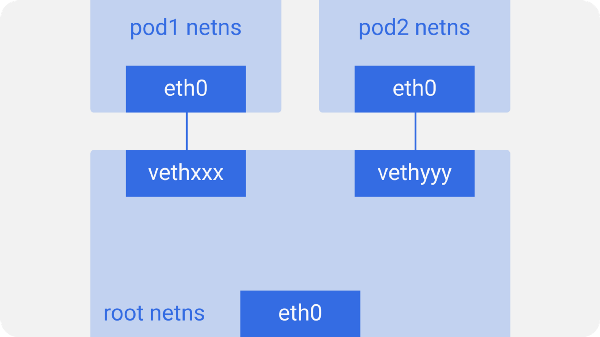

On every Kubernetes node (a Linux machine in this scenario) there is a root network namespace (root netns). Here "root" means the base namespace, not the superuser. The main network interface, eth0, lives in this root netns.

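**Kubernetes Node \(pod network namespace\)** Similarly, each Pod has its own netns, connected to the root netns by a virtual Ethernet (veth) pair. This is essentially a pipe pair, with one end in the root netns and the other end in the Pod's netns.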
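One way to see whether two processes share a network namespace (as the containers of one Pod do) is to compare the namespace inode behind `/proc/<pid>/ns/net`. The following is a minimal sketch, assuming a Linux host; the helper names are hypothetical, and reading another process's link may require root.

```python
import os
import re


def parse_ns_link(link: str) -> int:
    """Extract the inode from a link target such as 'net:[4026531992]'."""
    match = re.fullmatch(r"net:\[(\d+)\]", link)
    if match is None:
        raise ValueError(f"unexpected namespace link: {link!r}")
    return int(match.group(1))


def netns_inode(pid: int) -> int:
    """Return the network namespace inode of a process (Linux only)."""
    return parse_ns_link(os.readlink(f"/proc/{pid}/ns/net"))


def same_netns(pid_a: int, pid_b: int) -> bool:
    """True when both processes live in the same network namespace."""
    return netns_inode(pid_a) == netns_inode(pid_b)
```

Two containers of the same Pod should report the same inode, while a process in the root netns should report a different one.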

Virtual Extensible LAN (VXLAN): a virtual, extensible local area network / network outage: an interruption of network service

Kube-Proxy with iptables mode vs. IPVS mode

A Project Calico-like mechanism uses pure L3 routing without any NAT or bridges. This preserves the source IP, so ingress security policies can be applied properly based on source IPs.

CNI concerns itself only with the network connectivity of containers and with removing allocated resources when a container is deleted.

CNI has now become an integral part of Kubernetes, silently doing its job of connecting pods across different nodes and adapting well to different kinds of network solutions (overlays, pure L3, etc.).

So what is CNI? To put it succinctly, it is an interface between a network namespace and a network plugin. The container runtime (e.g. Docker, Rocket) provides the network namespace. The network plugin is the implementation that follows the CNI specification: it takes a container from the runtime and attaches it to, or detaches it from, the network that the plugin implements.

At a very high level, every CNI plugin is just a binary and a configuration file installed on K8s worker nodes. When a pod is scheduled to run on a particular node, a local node agent (kubelet) calls a CNI binary and passes all the necessary information to it. That CNI binary connects and configures network interfaces and returns the result back to kubelet. The information is passed to the CNI binary in two ways: through environment variables and through the CNI configuration file.
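The two channels can be sketched as follows. In the CNI specification, per-invocation parameters travel as `CNI_*` environment variables and the network configuration travels as JSON on the plugin's stdin. The container ID, netns path, and network name below are made-up illustration values, and `build_cni_invocation` is a hypothetical helper, not part of any library.

```python
import json


def build_cni_invocation(command, container_id, netns_path, ifname,
                         plugin_dir, net_conf):
    """Return (env, stdin_payload) for one call to a CNI plugin binary."""
    env = {
        "CNI_COMMAND": command,           # e.g. ADD or DEL
        "CNI_CONTAINERID": container_id,  # ID chosen by the runtime
        "CNI_NETNS": netns_path,          # path to the pod's netns
        "CNI_IFNAME": ifname,             # interface to create in the pod
        "CNI_PATH": plugin_dir,           # where plugin binaries are found
    }
    # The network configuration is serialized and written to the
    # plugin's stdin by the caller.
    return env, json.dumps(net_conf)


# Illustrative configuration using the reference "bridge" plugin with
# "host-local" IPAM; the name and subnet are assumptions.
EXAMPLE_CONF = {
    "cniVersion": "0.4.0",
    "name": "example-net",
    "type": "bridge",
    "ipam": {"type": "host-local", "subnet": "10.244.1.0/24"},
}

env, payload = build_cni_invocation(
    "ADD", "abc123", "/var/run/netns/example", "eth0",
    "/opt/cni/bin", EXAMPLE_CONF,
)
```

A caller would then run the binary named by `type` (looked up in `CNI_PATH`) with `env` as its environment and `payload` on stdin, for example through `subprocess.run(..., input=payload, env=env)`.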

The Container Network Interface (CNI) sits between the container runtime and the network implementation. Only the CNI plugin configures the network. What does CNI do? When a pod is added to a node, a CNI plugin is called on to do 3 basic things:

- Create eth0 inside the pod's network namespace; in other words, connect the pod, somehow, to the network

- Allocate the PodIP, usually from the PodCIDR

- Make this PodIP reachable by the whole cluster
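The second step, allocating a PodIP from the PodCIDR, can be sketched with Python's `ipaddress` module. This is a toy in-memory allocator under stated assumptions: the class name is hypothetical, the first host address is reserved as a gateway, and nothing is persisted, unlike a real IPAM plugin.

```python
import ipaddress


class PodIPAllocator:
    """Toy allocator that hands out addresses from one node's PodCIDR."""

    def __init__(self, pod_cidr: str):
        self.network = ipaddress.ip_network(pod_cidr)
        self._hosts = iter(self.network.hosts())
        # Assumption: the first usable address is kept for the gateway.
        self.gateway = next(self._hosts)
        self.allocated = {}   # pod name -> IP address
        self._released = []   # addresses free for reuse

    def allocate(self, pod_name: str):
        """Return the pod's IP, assigning a new one on first request."""
        if pod_name in self.allocated:
            return self.allocated[pod_name]
        if self._released:
            ip = self._released.pop()
        else:
            try:
                ip = next(self._hosts)
            except StopIteration:
                raise RuntimeError("PodCIDR exhausted") from None
        self.allocated[pod_name] = ip
        return ip

    def release(self, pod_name: str) -> None:
        """Free the pod's IP so a later pod can reuse it."""
        ip = self.allocated.pop(pod_name, None)
        if ip is not None:
            self._released.append(ip)


allocator = PodIPAllocator("10.244.1.0/24")
ip_a = allocator.allocate("pod-a")
ip_b = allocator.allocate("pod-b")
```

Releasing an address on pod deletion mirrors the cleanup half of CNI's responsibilities.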

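In iptables mode, kube-proxy uses the filter and nat tables of iptables and extends the iptables chains with four custom chains: KUBE-SERVICES, KUBE-NODEPORTS, KUBE-POSTROUTING, and KUBE-MARK-MASQ. It also adds a number of chains whose names start with "KUBE-SVC-" and "KUBE-SEP-".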
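Because iptables evaluates rules in order, a KUBE-SVC- chain that spreads traffic over several KUBE-SEP- chains typically uses the `statistic` match in random mode with a different probability on each rule. The sketch below only works out the arithmetic; the function names are hypothetical and no iptables rules are generated.

```python
from fractions import Fraction


def endpoint_probabilities(n: int):
    """Per-rule match probabilities for n endpoints evaluated in order.

    Rule i is reached only if rules 0..i-1 did not match, so it must
    match with probability 1/(n - i) for the overall split to be even.
    """
    if n < 1:
        raise ValueError("need at least one endpoint")
    return [Fraction(1, n - i) for i in range(n)]


def effective_share(probabilities):
    """Overall fraction of traffic that each rule ends up receiving."""
    shares = []
    remaining = Fraction(1)
    for p in probabilities:
        shares.append(remaining * p)
        remaining *= 1 - p
    return shares


probs = endpoint_probabilities(3)
shares = effective_share(probs)
```

With three endpoints the rules match with probability 1/3, 1/2, and 1, which gives each endpoint one third of the traffic.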

Underlay network: bridge, macvlan, ipvlan

"the way it shapes up the network fabric": how it structures the network fabric / "high rate of churn or change in the network": network components are added, removed, or changed frequently

iptables is a widely used firewall tool that interfaces with the kernel’s netfilter packet filtering framework (iptables rules are grouped into chains).

As packets progress through the stack, they will trigger the kernel modules that have registered with these hooks.
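The hook mechanism can be modelled in a few lines. This is a simplified sketch, not kernel code: the hook names are the five IPv4 netfilter hooks, the priorities mirror the usual mangle (-150), destination NAT (-100), and filter (0) ordering, and `HookRegistry` is a hypothetical class.

```python
from collections import defaultdict

# Hooks a packet passes, depending on where it comes from and goes to.
HOOK_PATHS = {
    "incoming": ["NF_IP_PRE_ROUTING", "NF_IP_LOCAL_IN"],
    "forwarded": ["NF_IP_PRE_ROUTING", "NF_IP_FORWARD",
                  "NF_IP_POST_ROUTING"],
    "outgoing": ["NF_IP_LOCAL_OUT", "NF_IP_POST_ROUTING"],
}


class HookRegistry:
    """Keeps the modules registered at each hook, ordered by priority."""

    def __init__(self):
        self._hooks = defaultdict(list)

    def register(self, hook: str, priority: int, name: str) -> None:
        self._hooks[hook].append((priority, name))
        self._hooks[hook].sort()  # lower priority values run first

    def traverse(self, kind: str):
        """List (hook, module) pairs triggered for one kind of packet."""
        trace = []
        for hook in HOOK_PATHS[kind]:
            for _priority, name in self._hooks[hook]:
                trace.append((hook, name))
        return trace


registry = HookRegistry()
registry.register("NF_IP_PRE_ROUTING", -100, "nat")
registry.register("NF_IP_PRE_ROUTING", -150, "mangle")
registry.register("NF_IP_FORWARD", 0, "filter")
forwarded_trace = registry.traverse("forwarded")
```

A forwarded packet triggers mangle and then nat at PRE_ROUTING, followed by filter at FORWARD; POST_ROUTING has no registered modules in this example.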

IPVS is built on top of netfilter. For 2.4.x kernels (and beyond), LVS was rewritten as a netfilter module rather than as a piece of stand-alone kernel code.

Project Calico

Calico uses a pure IP networking fabric to deliver high performance Kubernetes networking, and its policy engine enforces developer intent for high-level network policy management.

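Calico does not use the CNI bridge mode. Instead, Calico's CNI plugin has to configure a route on the host for each container's veth pair. The CNI plugin is the part of Calico that integrates with Kubernetes.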
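The per-container route can be pictured as a single-address route that points at the host end of the veth pair. The sketch below builds the equivalent `ip route` command line for illustration only: the helper is hypothetical, the interface name is made up, and Calico itself is not assumed to shell out to `ip`.

```python
import ipaddress


def host_route_command(pod_ip: str, host_veth: str):
    """Build an `ip route add` argv for one pod's host-side veth."""
    addr = ipaddress.ip_address(pod_ip)
    if addr.version == 4:
        base, prefix_len = ["ip"], 32
    else:
        base, prefix_len = ["ip", "-6"], 128
    # A /32 (or /128) route: only this one pod is reachable via the device.
    return base + ["route", "add", f"{addr}/{prefix_len}",
                   "dev", host_veth, "scope", "link"]


command = host_route_command("10.244.1.2", "cali0example")
```

Since every pod gets its own route, the host forwards traffic at L3 without needing a bridge.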

In Calico an endpoint is a virtual interface from a workload (container or virtual machine) into the Calico network, and workloads may have more than one endpoint.

Related Blogs

- How To Inspect Kubernetes Networking (Great)

- A Guide to the Kubernetes Networking Model

- Kubernetes Network Links (github)

- Container Networking From Scratch

- Kubernetes Networking Illustrated (Part 1) (in Chinese)

- Kubernetes networking deep dive: Did you make the right choice? (Great)

Kube-proxy & IPVS

- kube-proxy mode

- IPVS-Based In-Cluster Load Balancing Deep Dive

- Load Balancing Kubernetes Ingress Traffic with IPVS (in Chinese)

- IPVS-based Kube-proxy for Scaled Kubernetes Load Balancing (2018-10)

- Understanding Kubernetes Kube-Proxy 2019

- LVS: Running a firewall on the director: Interaction between LVS and netfilter (iptables)

- A Deep Dive into Iptables and Netfilter Architecture

- Huawei Cloud's Service Performance Optimization Practices for Large-Scale Kubernetes (in Chinese)

- How LVS Works, Illustrated (in Chinese)

- How To Inspect Kubernetes Networking

CNI Network Provider

- Choosing a CNI Network Provider for Kubernetes

- Comparative Kubernetes networks solutions

- Kubernetes Networking: Understanding How Calico Works (2018/09, in Chinese)

- Kubernetes Networking: Pods (Calico) (in Chinese)

- How Kubernetes Networking Works – The Basics

- Benchmark results of Kubernetes network plugins (CNI) over 10Gbit/s network (2018/11, Great)

Debug Service

- Kubernetes Debug Service

- How To Inspect Kubernetes Networking

- Understanding the network plumbing that makes Kubernetes pods and services work

- Kubernetes Services and Iptables (Great)

- Kubernetes Cluster Nodes Cannot Reach a Service: kube-proxy cluster-cidr Not Set Correctly (in Chinese)

- An Analysis of Kubernetes Service Modes (Great, in Chinese)

- How to Inspect the Configuration of a Kubernetes Node

- From Confused to Proficient: Three Key Elements and Implementation of Kubernetes Cluster Service (netfilter)

- Using eBPF to Analyze Duplicate (dup) Packets in Container Networking (in Chinese)